NI Develops Cybernetic Leadership Team in Preparation for Long-Term Future April 1, 2010

Posted by emiliekopp in robot fun. Tags: cyborg, dr t, humanoid, jeff_kodosky, mini hubo, national_instruments, news_release, robot_apocolypse

Check out the latest news release I found on our site this morning:

NI LabVIEW Robotics Helps Implement Founder’s 100-Year Plan – Literally

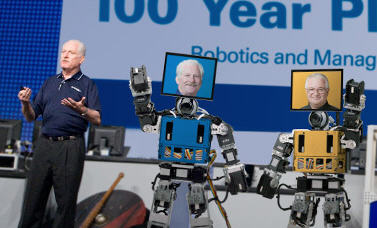

April 1, 2010 – Austin, Texas – National Instruments is known for providing design, control and test solutions to engineers around the world. Additionally, NI is known for creating easy-to-use graphical programming for domain experts in every industry from robotics to green to medical. And stakeholders know NI for its long-term planning. The company’s lasting view, known as the “100-year plan,” looks decades into the future to ensure the needs of these stakeholders are given appropriate consideration. To fully execute on this initiative, the management team chose to create a plan that will actually be around for all 100 years. Today the company is unveiling robotic clones of founders Dr. James Truchard and Jeff Kodosky.

Introduced by NI CEO and cofounder, Dr. James Truchard, the 100-year plan balances the long-term NI vision with short-term goals and defines which company philosophies, ethics, values and principles are necessary to guide the company’s growth through future generations. Powered by LabVIEW Robotics, the JT-76 and the JK-86, respectively, are ready for anything that comes their way, be it design challenges that threaten cost overruns or intergalactic overlords seeking to enslave the human race.

Read more from the news release here.

Needless to say, the entire company is buzzing with this latest news. Will the rest of the leadership team also be migrated to robot clones? Will the robots be programmed for good or evil? Are the cyborgs’ artificial intelligence VIs open source and will they be shared on the LabVIEW Robotics Code Exchange? And does anyone else think the cyborgs have a striking resemblance to MiNI-Hubo?

I, for one, welcome our NI robot overlords.

National Instruments Releases New Software for Robot Development: Introducing LabVIEW Robotics December 7, 2009

Posted by emiliekopp in industry robot spotlight, labview robot projects. Tags: a*, arm, crio, exclusive, labview_robotics, lidar, national_instruments, new_software, robot_revolution, sbRIO, starter_kit, ugv, velodyne

Well, I found out what the countdown was for. Today, National Instruments released new software specifically for robot builders: LabVIEW Robotics. One of the many perks of being an NI employee is that I can download software directly from our internal network, free of charge, so I decided to check it out for myself. (Note: This blog post is not a full product review; I haven’t had much time to critique the product, so these are just some high-level feature highlights.)

While the product video states that LabVIEW Robotics software is built on 25 years of LabVIEW development, right off the bat, I notice some big differences between LabVIEW 2009 and LabVIEW Robotics. First off, the Getting Started Window:

If you’re not already familiar with LabVIEW, this won’t sound like much, but the Getting Started Window now features a new, improved experience, starting with an embedded, interactive Getting Started Tutorial video (starring robot-friend Shelley Gretlein, a.k.a. RoboGret). There’s a Robotics Project Wizard in the upper left corner that, when you click on it, helps you set up your system architecture and select various processing schemes for your robot. At first glance, the wizard looks best suited to NI hardware (e.g. sbRIO, cRIO, and the NI LabVIEW Robotics Starter Kit), but it looks like future software updates might add other, third-party processing targets (perhaps ARM?).

The next big change I noticed is the all-new Robotics functions palette. I’ve always felt that LabVIEW is a good programming language for robot development, and now it just got better, with many new robotics-specific programming functions, from Velodyne LIDAR sensor drivers to A* path planning algorithms. There appear to be hundreds of new VIs created for this product release.
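
For anyone curious what an A* path planner actually computes, here’s a minimal sketch in Python. To be clear, this is not the LabVIEW Robotics VI, just my own illustration of the algorithm: it assumes a small grid map where `'#'` marks an obstacle cell, a robot that moves one cell up, down, left or right at a time, and a unit cost per step. The function name `a_star` is hypothetical.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (illustrative sketch).

    grid: list of equal-length strings; '#' marks an obstacle cell.
    start, goal: (row, col) tuples. Returns a list of cells, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                ng = g + 1
                if ng < g_cost.get((nr, nc), float('inf')):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

Calling `a_star(["....", ".##.", "...."], (0, 0), (2, 3))` returns a six-cell path that routes around the two blocked cells. Because the Manhattan-distance heuristic never overestimates the remaining cost on this kind of grid, A* still returns a shortest path while typically exploring fewer cells than an uninformed search.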

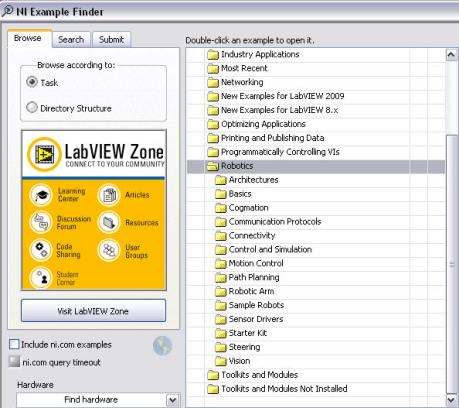

Which leads me to the Example Finder. There are several new robotics-specific example VIs to choose from to help you get started. Some examples help you connect to third-party software, like Microsoft Robotics Studio or Cogmation robotSim. There are examples for motion control and steering, including differential drive and mecanum steering. There are also full-fledged example project files for various types of UGVs for you to study and copy/paste from, including the project files for ViNI and NIcholas, two NI-built demonstration robots. And if that’s not enough, NI has launched a new code exchange specifically for robotics, with hundreds of additional examples to share and download online. (A little birdie told me that NI R&D will also be contributing code to this code exchange in between product releases.)
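
The steering examples are, at heart, kinematics. As a rough illustration (my own sketch, not code taken from the LabVIEW Robotics examples), here’s the standard differential-drive inverse kinematics in Python: given the forward speed and turn rate you want the robot to have, it works out how fast each wheel should spin. The function and parameter names are hypothetical, and mecanum steering would need a different, four-wheel version of this math.

```python
def diff_drive_wheel_speeds(v, omega, track_width, wheel_radius):
    """Inverse kinematics for a differential-drive robot (illustrative sketch).

    v: desired forward speed of the robot's center point (m/s)
    omega: desired yaw rate (rad/s, positive = counterclockwise, i.e. turning left)
    track_width: distance between the left and right wheels (m)
    wheel_radius: wheel radius (m)
    Returns (left, right) wheel angular speeds in rad/s.
    """
    # Each wheel's ground speed is the center speed plus or minus the
    # extra speed needed to rotate about the center at rate omega.
    v_left = v - omega * track_width / 2.0
    v_right = v + omega * track_width / 2.0
    # Convert linear wheel speed to wheel angular speed
    return v_left / wheel_radius, v_right / wheel_radius
```

For example, with a 0.5 m track and 0.25 m wheels, asking for 1 m/s forward while turning left at 1 rad/s gives `(3.0, 5.0)` rad/s: the right wheel spins faster, so the robot curves left. Setting `v` to zero makes the wheels spin in opposite directions, turning the robot in place.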

This is just a taste of the new features this product has. To get the official product specs and feature list, you’ll have to visit the LabVIEW Robotics product page on ni.com. I also found this webcast, Introduction to NI LabVIEW Robotics, if you care to watch a 9-minute demo.

A more critical product review will be coming soon.

Looks like the robot revolution has begun.

How to Build a Quad Rotor UAV October 6, 2009

Posted by emiliekopp in code, labview robot projects. Tags: .m-file, code, FPGA, how_to, labview, national_instruments, quad_rotor, robotics, sbRIO, UAV, xkcd

Blog Spotlight: Dr. Ben Black, a Systems Engineer at National Instruments, is documenting his trials and tribulations on his blog as he builds an autonomous unmanned aerial vehicle (UAV) using a Single-Board RIO (2M-gate FPGA + 400 MHz PowerPC processor), four brushless motors, some serious control theory and lots of Gorilla Glue.

I particularly appreciate his attention to detail, stepping through elements of UAV design that are often taken for granted, like choosing reference frames, deciding when to use PID control, and the genius that is xkcd.
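
Since PID control comes up, here’s a minimal textbook discrete PID controller in Python for anyone who wants to play with the idea. This is my own hedged sketch, not Ben’s code; the class name, the gains and the toy first-order plant in the usage lines are made-up values chosen only to show the loop settling on its setpoint, not anything tuned for a real quad rotor.

```python
class PID:
    """Minimal discrete PID controller, parallel form (illustrative sketch)."""

    def __init__(self, kp, ki, kd, out_min=float('-inf'), out_max=float('inf')):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt  # rectangular (Euler) integration of the error
        # Finite-difference derivative of the error; zero on the first call
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, out))  # clamp to actuator limits


# Usage: drive a toy first-order plant (dx/dt = u - x) toward a setpoint of 1.0
pid = PID(kp=2.0, ki=1.0, kd=0.0)
x, dt = 0.0, 0.01
for _ in range(2000):
    u = pid.update(1.0, x, dt)
    x += (u - x) * dt  # forward-Euler step of the toy plant
print(round(x, 3))
```

The integral term is what removes the steady-state error here; with `ki=0` this plant would settle short of the setpoint. Real flight controllers typically add refinements this sketch leaves out, such as integral anti-windup and filtering on the derivative term.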

Like most roboticists, throughout the design process, he has to wear many hats. I think Ben put it best:

I think that the true interdisciplinary nature of the problems really makes the field interesting. A roboticist has to have at minimum a working knowledge of mechanical engineering, electrical engineering, computer science / engineering and controls engineering. My background is from the world of mechanical engineering (with a little dabbling in bio-mechanics), but I end up building circuits and writing tons of code. I’ve had to pick up / stumble through the electrical and computer science knowledge as I go along, and I know just enough to make me dangerous (I probably don’t always practice safe electrons…sometimes I let the magic smoke out of the circuits…and I definitely couldn’t write a bubble sort algorithm to save my life).

My point in this soap-box rant is that in the world of robotics it’s good to have a specialty, but to really put together a working system you also need to be a bit of a generalist.

For anyone even considering building a UAV (or who just likes to read about cool robotics projects), I suggest you check it out. He shares his .m-files, LabVIEW code, and more. Thanks, Ben.

Blind Driver Challenge from Virginia Tech: A Semi-Autonomous Automobile for the Visually Impaired September 1, 2009

Posted by emiliekopp in industry robot spotlight, labview robot projects. Tags: autonomous_vehicle, bdc, blind_driver_challenge, cots, FPGA, haptics, labview, lidar, national_instruments, romela, virginia_tech

How nine mechanical engineering undergrads used commercial off-the-shelf (COTS) technology, along with design hardware and software donated by National Instruments, to create a sophisticated, semi-autonomous vehicle that allows the visually impaired to perform a task previously thought impossible: driving a car.

Greg Jannaman (pictured in passenger seat), ME senior and team lead for the Blind Driver Challenge project at VT, was kind enough to offer some technical details on how they made this happen.

How does it work?

One of the keys to success was leveraging COTS technology whenever possible. Rather than building things from scratch, the team purchased hardware from commercial vendors, which let them focus on the important stuff, like how to translate visual information for a blind driver.

Example of the information sent back from a LIDAR sensor

So they started with a dune buggy. They mounted a Hokuyo laser range finder (LIDAR) on the front; it pulses a laser signal across the area in front of the vehicle and detects obstacles from the laser signals that bounce back. LIDAR is a lot like radar, only it uses light instead of radio waves.
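
To make the obstacle-detection part a bit more concrete: a planar laser scanner typically reports a list of distances measured at evenly spaced angles. The Python sketch below (my own illustration, not the VT team’s LabVIEW code) converts such a scan into x/y points in front of the sensor and picks out the closest obstacle. The angle convention (0 = straight ahead, positive = left), the `max_range` cutoff and the function names are all assumptions; a real scanner’s field of view and resolution come from its datasheet.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert a planar laser scan (polar) into (x, y) points in the sensor frame.

    ranges[i] is the distance measured at angle angle_min + i * angle_increment
    (radians; 0 = straight ahead, positive = to the left). Readings that are not
    positive or exceed max_range are treated as 'no return' and dropped.
    """
    points = []
    for i, r in enumerate(ranges):
        if 0.0 < r <= max_range:
            theta = angle_min + i * angle_increment
            points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def nearest_obstacle(points):
    """Return (distance, bearing_rad) of the closest point, or None if there are none."""
    if not points:
        return None
    x, y = min(points, key=lambda p: math.hypot(p[0], p[1]))
    return math.hypot(x, y), math.atan2(y, x)
```

For example, a four-beam scan `[2.0, 1.0, 0.0, 5.0]` starting at -90° in 90° steps with a 4 m cutoff keeps two points, and the nearest one is 1 m straight ahead.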

For control of the vehicle, they used a CompactRIO embedded processing platform. They interfaced their sensors and vehicle actuators directly to an onboard FPGA and performed the environmental perception on the real-time 400 MHz PowerPC processor. Processing the sensor feedback in real time allowed the team to send immediate feedback to the driver. But the team did not have to learn how to program the FPGA in VHDL, nor did they have to program the embedded processor in machine-level code. Instead, they did all the programming on one software development platform: LabVIEW. This enabled nine MEs to become embedded programmers on the spot.

But how do you send visual feedback to someone who cannot see? You use their other senses: mainly touch and hearing. The driver wears a vest containing vibrating motors, much like the motors you would find in your PS2 controller (this is called haptic feedback, for anyone interested). The CompactRIO makes the vest vibrate to notify the driver of obstacle proximity and to regulate speed, just like in a car racing video game. The driver also wears a set of headphones. The system sends a series of clicks to the left and right earphones, and the driver uses this audible feedback to navigate around the detected obstacles.
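
The team’s exact mapping from sensor data to vest and headphone cues isn’t described here, so the following is purely a hypothetical sketch of the idea in Python: vibration strength grows as the nearest obstacle gets closer, and the click cue is split between the left and right ears according to which side the obstacle is on. The function name, the thresholds (`warn_distance`, `stop_distance`) and the linear ramp are made-up values for illustration only.

```python
import math


def feedback_cues(distance, bearing, warn_distance=5.0, stop_distance=1.0):
    """Map the nearest obstacle to hypothetical vest and headphone cue levels.

    distance: meters to the closest obstacle; bearing: radians, positive = left.
    Returns (vibration, left_click, right_click), each in [0, 1].
    """
    if distance >= warn_distance:
        return 0.0, 0.0, 0.0  # nothing close enough to warn about
    # Vibration ramps linearly from 0 at warn_distance up to 1 at stop_distance,
    # and stays at 1 for anything closer.
    span = warn_distance - stop_distance
    vibration = min(1.0, max(0.0, (warn_distance - distance) / span))
    # Split the click cue between the ears: +1 fully left, -1 fully right, 0 dead ahead
    side = math.sin(bearing)
    left_click = vibration * (1.0 + side) / 2.0
    right_click = vibration * (1.0 - side) / 2.0
    return vibration, left_click, right_click
```

With these made-up thresholds, an obstacle 3 m away directly to the left gives half-strength vibration with all of the click cue in the left ear, while anything 5 m or farther produces no cue at all.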

The team has already hosted numerous blind drivers to test the vehicle. The test runs have been so successful that they’re having to ask drivers to refrain from doing donuts in the parking lot. And they already have some incredible plans to improve the vehicle even further. Watch the video to find out more about the project and learn about their plans to further incorporate haptic feedback.

I am famous like David Hasselhoff April 17, 2009

Posted by emiliekopp in robot events, robot fun. Tags: david_hasselhoff, labview, national_instruments, robodevelopment, robot, robotics, stern, video, webcast

I found this the other day and about fell out of my chair.

Back in Fall 2008, I attended the RoboDevelopment Conference and Expo (where the epic Man vs. Machine Rubik’s Cube face-off was caught on video). I delivered a technical presentation there called “Defining a Common Architecture for Robotics Systems.” Fancy title, huh? I thought so. You can view a webcast of my presentation on NI’s website if anyone’s interested.

So, I was in NI’s booth, showing off some of our robots, and I started talking to a German journalist (in English, of course). He had a nice video camera. He interviewed me about, well, I can’t remember, robot stuff I guess. Five months later, I stumbled upon this:

Fast forward to ~1:05 and you’ll see a familiar face. But holy crap, it’s all dubbed in German, so I have no idea what I’m saying. I have absolutely no idea why Britney Spears is mentioned in the video title and write-up. Should I be worried?

My friend Silke, from NI Germany, said “the publishing site, Stern, is a very famous and popular German magazine on politics, economics, popular sciences, and lifestyle. Maybe comparable to Newsweek or the Spectator. Emily: You are a famous star now (“Stern” means “star” in German)!”

So apparently, I’m huge in Germany. David Hasselhoff, eat your heart out.